In our continuing series on Large Language Models (LLMs), today we turn to an essential aspect of these systems: security. As LLMs become more integral to our daily digital interactions, securing them is paramount. In this installment, we explore the threats, vulnerabilities, and protective measures associated with LLMs, with the aim of explaining how to safeguard these powerful tools.

Ensuring Security in Large Language Models: Protecting the Integrity of AI

Understanding the Security Landscape in LLMs

Large Language Models have revolutionized the field of artificial intelligence, offering unprecedented capabilities in understanding and generating natural language. However, their complexity and the vast amount of data they handle also introduce significant security risks. Addressing these risks is critical to maintaining the trust and reliability of AI systems.

Threats and Vulnerabilities

- Adversarial Attacks: These attacks manipulate input data (for example, through prompt injection) to deceive LLMs into producing incorrect or harmful outputs. They can undermine the reliability of AI systems, causing them to generate misleading information or perform unintended actions.

- Data Poisoning: Data poisoning occurs when malicious actors inject harmful data into the training datasets of LLMs. This can lead to biased or erroneous model behavior, compromising the integrity of the AI’s outputs.

- Information Leakage: Because LLMs are trained on very large datasets, they can memorize and unintentionally reproduce sensitive information. Ensuring that models do not disclose private or proprietary data is a critical security concern.
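
To make the information leakage risk more concrete, here is a minimal sketch of an output filter that scans model responses for sensitive-looking strings before they reach the user. The function name `redact`, the pattern set, and the `sk-` key format are all hypothetical and chosen only for illustration; a real deployment would need far broader detection than two regular expressions.

```python
import re

# Hypothetical patterns for illustration only; real systems need a much wider set.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "api_key": re.compile(r"\bsk-[A-Za-z0-9]{16,}\b"),  # assumed key format
}


def redact(text: str) -> tuple[str, list[str]]:
    """Replace sensitive-looking matches and report which pattern types fired."""
    found = []
    for label, pattern in SENSITIVE_PATTERNS.items():
        # subn returns the rewritten text and the number of substitutions made.
        text, count = pattern.subn(f"[REDACTED {label.upper()}]", text)
        if count:
            found.append(label)
    return text, found


if __name__ == "__main__":
    clean, hits = redact("Contact alice@example.com for access.")
    print(clean)  # Contact [REDACTED EMAIL] for access.
    print(hits)   # ['email']
```

A filter like this only catches leakage at the output boundary. It does not stop the model from memorizing the data in the first place, which is why it is usually combined with training-time measures.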

Protective Measures

- Robustness and Defense Techniques

Adversarial training, in which models are trained on adversarial examples, can make LLMs more robust against attacks. Differential privacy, which adds calibrated noise so that no single training record has a large influence on the result, can help protect against data leakage. For a comprehensive list of best practices and security measures specifically for LLM applications, refer to the OWASP Top 10 for Large Language Model Applications.

- Security Testing and Validation

Regular security audits and penetration testing are essential to identify and mitigate vulnerabilities in LLMs. Automated tools for continuous monitoring also help maintain the security posture of these models.

- Privacy Policies and Compliance

Adhering to strict privacy policies and regulatory standards ensures that LLMs are used responsibly. This includes implementing data minimization practices and ensuring compliance with data protection laws such as GDPR.
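
The differential privacy idea mentioned among the defense techniques can be illustrated with a small sketch. Note that this is the classic Laplace mechanism applied to a counting query, not the noisy-gradient training procedure typically used for LLMs; it is shown here only because it conveys the core idea in a few lines. The names `laplace_noise` and `private_count` are hypothetical.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) noise.

    The difference of two independent exponentials with rate 1/scale
    follows a Laplace distribution with that scale.
    """
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the records that satisfy predicate.

    Adding or removing one record changes a count by at most 1
    (sensitivity 1), so Laplace noise with scale 1/epsilon gives
    epsilon-differential privacy for this query.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random()
    ages = [23, 35, 41, 29, 52, 47, 38]
    # Smaller epsilon means more noise and stronger privacy.
    print(private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng))
```

The trade-off is visible in the `epsilon` parameter: lowering it strengthens the privacy guarantee but makes the answer less accurate, which mirrors the tension between security and model performance.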

Case Studies and Practical Examples

To illustrate the importance of security in LLMs, let’s consider a few real-world examples:

- OpenAI’s GPT-3: Despite its advanced capabilities, GPT-3 has faced issues with generating biased or harmful content. OpenAI has implemented various safety mitigations, including fine-tuning and user feedback mechanisms, to address these challenges.

- Microsoft’s Tay: An earlier example, Microsoft’s Tay chatbot, was manipulated by users to produce offensive language. This incident highlighted the need for robust security and moderation mechanisms in interactive AI systems.

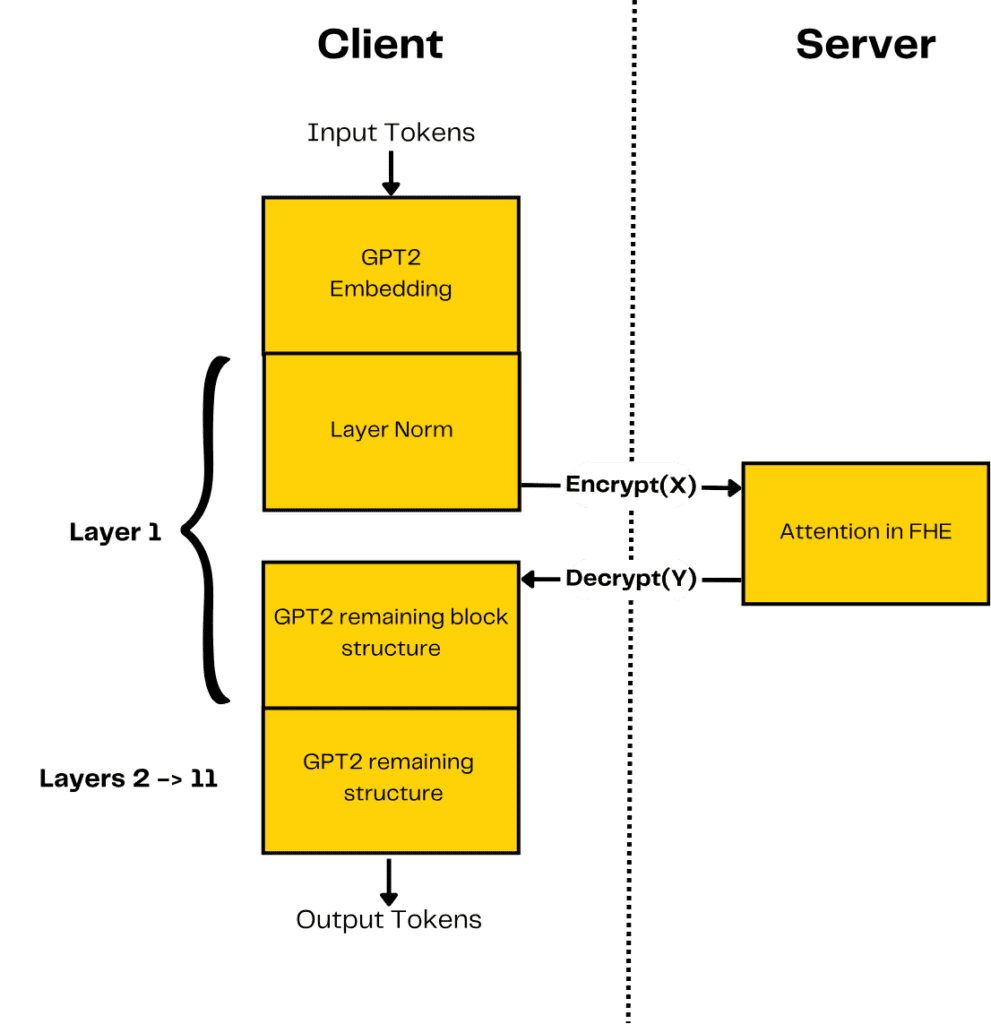

- Homomorphic Encryption Implementation: Zama has demonstrated how homomorphic encryption, which allows computation directly on encrypted data, can protect both the intellectual property of the model and the privacy of user data while an LLM is in use (Lakera).

- Secure Multi-Party Computation (SMPC): This technique allows multiple parties to perform joint calculations without sharing their private data, ensuring confidentiality throughout the process (Experts Exchange).
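
The secure multi-party computation idea can be sketched with additive secret sharing, one of the simplest building blocks behind SMPC. This is a toy, single-process simulation under stated assumptions: the function names, the modulus, and the "parties" are all hypothetical, inputs are assumed to be non-negative integers whose sum is below the modulus, and there is no networking or protection against dishonest participants.

```python
import secrets

# Modulus for all share arithmetic; a Mersenne prime chosen for illustration.
PRIME = 2**61 - 1


def share(secret: int, n_parties: int) -> list[int]:
    """Split secret into n additive shares that sum to it modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    # The last share is chosen so that all shares add up to the secret.
    shares.append((secret - sum(shares)) % PRIME)
    return shares


def reconstruct(shares: list[int]) -> int:
    """Recombine shares by summing them modulo PRIME."""
    return sum(shares) % PRIME


def secure_sum(private_inputs: list[int]) -> int:
    """Compute the sum of all inputs without any party revealing its own."""
    n = len(private_inputs)
    # Each party splits its input; share j of every input goes to party j.
    all_shares = [share(x, n) for x in private_inputs]
    # Party j adds the shares it received; each one looks like a random number.
    partial_sums = [
        sum(all_shares[i][j] for i in range(n)) % PRIME for j in range(n)
    ]
    # Only the partial sums are published; together they reveal just the total.
    return reconstruct(partial_sums)


if __name__ == "__main__":
    print(secure_sum([10, 20, 12]))  # 42
```

Each individual share is uniformly random, so a party that sees only its own incoming shares learns nothing about the other inputs. Only the final total becomes known.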

Future Directions and Challenges

As the field of AI continues to evolve, so do the security challenges associated with LLMs. Ongoing research focuses on developing more sophisticated defenses, such as improving model interpretability to better understand and mitigate risks. However, significant challenges remain, including balancing security with model performance and ensuring the ethical use of AI.

In conclusion, security in Large Language Models is a multifaceted challenge that requires continuous attention and innovation. By understanding the potential threats and implementing robust protective measures, we can harness the power of LLMs while safeguarding their integrity and reliability. In our upcoming publications, we will explore specific security techniques and look more closely at the ethical considerations surrounding LLMs.

Stay tuned for our next article, where we will discuss the role of ethical AI in developing and deploying Large Language Models.

Izan Franco Moreno